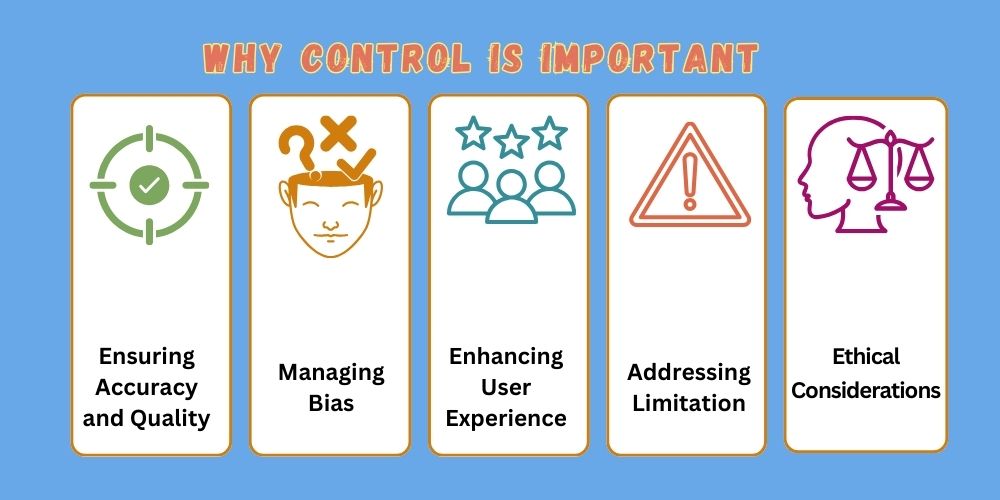

Controlling the output of generative AI systems is crucial: it helps ensure that the technology serves us safely and effectively.

Generative AI systems create new content, whether text, images, or music. There’s a lot of potential there, but we must also recognize the risks. If these systems are not monitored, they may produce harmful or misleading content. Monitoring their output helps keep them aligned with ethical standards and factually accurate, and it protects users from misinformation and bias.

In this blog post, you’ll discover why it’s so important to monitor the output of generative AI systems, along with the benefits and possible risks involved. Understanding these aspects helps us use AI responsibly and tap into its full potential for good.

Controlling the Output of Generative AI: Potential Risks

Generative AI systems have a lot of potential, but alongside their benefits they also carry risks. Understanding those risks is essential for using AI safely. Let’s look at some of the main ones.

Misinformation Spread

Generative AI can create content that looks real, which makes it easy for misinformation to spread quickly. Fake news articles, altered images, and deepfake videos can mislead people, and inaccurate information can travel far before anyone corrects it.

Here are a few examples in the table below:

| Type of Misinformation | Potential Impact |
|---|---|
| Fake News | Political unrest, social panic |
| Altered Images | Damaged reputations, loss of public trust |
| Deepfake Videos | Identity theft, fraud |

These examples show how serious misinformation can be. It is important to control AI outputs to reduce these risks.

Controlling the Output of Generative AI: Ethical Concerns

AI ethics is widely debated, and generative AI systems raise many ethical issues. One is that these systems can generate biased content; that bias often originates in the data used to train the model.

Here are a few essential ethical concerns:

- Bias and discrimination

- Privacy violations

- Unintended consequences

Bias can lead to people being treated unfairly. Privacy violations can occur if AI generates content that exposes personal data. And using AI in ways its designers did not anticipate can lead to unexpected problems.

Addressing these ethical concerns is crucial, because it helps ensure that AI benefits everyone without causing harm.

Controlling the Output of Generative AI: Safety Measures

Monitoring how generative AI systems produce their output is key to keeping that output safe and dependable. Safety measures aim to stop harmful or biased content before it reaches users. These measures help build trust and protect the integrity of AI outputs.

Content Filtering

Content filtering keeps output high-quality and appropriate. It means detecting and removing inappropriate or harmful material, such as strong language, violent themes, or adult content. Common approaches include:

- Find keywords that aren’t suitable.

- Use AI to detect harmful phrases.

- Set rules for content approval.

Filtering helps protect users from offensive content and ensures that the AI’s output matches the community’s expectations.
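To make the first and third bullets concrete, here is a minimal sketch of a keyword filter with a simple approval rule. The blocklist terms (`badword`, `offensiveterm`) and the names `keyword_filter` and `FilterResult` are placeholders invented for illustration; real systems usually pair curated keyword lists with AI classifiers rather than relying on keywords alone.

```python
import re
from dataclasses import dataclass, field
from typing import List, Set

# Placeholder blocklist for illustration only; real lists are curated and reviewed.
BLOCKED_TERMS = {"badword", "offensiveterm"}


@dataclass
class FilterResult:
    allowed: bool
    matches: List[str] = field(default_factory=list)


def keyword_filter(text: str, blocked: Set[str] = BLOCKED_TERMS) -> FilterResult:
    """Reject text containing any blocked term as a whole word (case-insensitive)."""
    if not blocked:
        # An empty alternation would match everywhere, so "no terms" means "allow".
        return FilterResult(allowed=True)
    pattern = re.compile(
        r"\b(" + "|".join(re.escape(term) for term in sorted(blocked)) + r")\b",
        re.IGNORECASE,
    )
    matches = [m.group(0).lower() for m in pattern.finditer(text)]
    # Approval rule: the output is allowed only if no blocked term was found.
    return FilterResult(allowed=not matches, matches=matches)


result = keyword_filter("This reply contains a BadWord.")
print(result.allowed, result.matches)  # False ['badword']
```

Matching on word boundaries (`\b`) avoids flagging innocent words that merely contain a blocked term, at the cost of missing obfuscated spellings such as `b4dword`.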

Bias Mitigation

Bias mitigation aims to keep AI outputs fair and as free from bias as possible. Generative AI can replicate or even amplify existing biases, producing unfair or discriminatory outcomes. Mitigation typically involves:

- Identify biased patterns in AI outputs.

- Adjust algorithms to reduce bias.

- Regularly review and update bias controls.

Reducing bias creates a fairer environment for users and is a core part of building reliable AI systems.
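One common way to identify biased patterns in outcomes is to compare how often each group receives a favorable result. The sketch below computes per-group selection rates and the ratio of the lowest to the highest, flagging ratios under 0.8, a threshold borrowed from the "four-fifths rule" heuristic in US employment-selection guidelines. The record format and function names are assumptions for illustration, and a low ratio is a signal to investigate, not proof of bias.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def selection_rates(records: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Share of favorable outcomes per group, from (group, was_selected) pairs."""
    totals: Dict[str, int] = defaultdict(int)
    selected: Dict[str, int] = defaultdict(int)
    for group, was_selected in records:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates: Dict[str, float]) -> float:
    """Lowest group rate divided by highest group rate (1.0 means equal rates)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so there is no disparity to measure
    return min(rates.values()) / highest


def flag_bias(records: Iterable[Tuple[str, bool]], threshold: float = 0.8):
    """Return (flagged, ratio, rates); flagged is True when ratio < threshold."""
    rates = selection_rates(records)
    ratio = disparate_impact_ratio(rates)
    return ratio < threshold, ratio, rates


# Hypothetical screening results: group A 8/10 selected, group B 4/10 selected.
data = [("A", i < 8) for i in range(10)] + [("B", i < 4) for i in range(10)]
flagged, ratio, rates = flag_bias(data)
print(flagged, ratio, rates)  # True 0.5 {'A': 0.8, 'B': 0.4}
```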

Controlling the Output of Generative AI: Legal Implications

Managing how generative AI systems produce results also has significant legal implications. These systems can create content that breaks the law, so making sure they stay compliant is vital for avoiding legal problems.

Regulatory Frameworks for Controlling the Output of Generative AI

Different countries and regions have their own rules for AI systems, and these frameworks help keep AI output within the bounds of local law. Some jurisdictions, for example, strictly regulate hate speech, false information, and privacy breaches. AI systems operating there must follow these rules to avoid penalties.

Understanding local regulations is essential. Companies need to track changes in these laws and keep their AI systems compliant, which helps them avoid legal trouble and protect their reputation.

Compliance Requirements for Controlling the Output of Generative AI

Meeting compliance requirements is another crucial element. AI output should be checked for compliance before it is published: the content must not infringe copyrights or other intellectual property rights, and it must respect user privacy.

There are various methods to ensure compliance:

- Regular audits of AI outputs

- Implementing strict content filters

- Training AI models with ethical guidelines

Companies should document how they meet compliance requirements. This documentation helps if the company faces legal scrutiny, because it shows a serious commitment to following the law.
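As a sketch of what such documentation might look like, the functions below build one JSON Lines record per reviewed output and append it to a log file. The field names, the check names in the example, and the choice to store a SHA-256 hash instead of the raw text are assumptions for illustration, not a legal standard; what a company actually needs to record depends on the regulations that apply to it.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Dict, Optional


def audit_record(output_text: str, checks: Dict[str, bool], reviewer: Optional[str] = None) -> str:
    """Build one JSON Lines entry describing how a generated output was checked."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        # Store a hash instead of the raw text so the log does not copy sensitive content.
        "output_sha256": hashlib.sha256(output_text.encode("utf-8")).hexdigest(),
        "checks": checks,
        "passed": all(checks.values()),
        "reviewer": reviewer,
    }
    return json.dumps(record, sort_keys=True)


def append_audit(path: str, line: str) -> None:
    """Append one entry to the audit file (opened in append mode)."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(line + "\n")


# Hypothetical check names; each maps to the result of one compliance check.
entry = audit_record(
    "Example generated text.",
    {"copyright_clear": True, "no_personal_data": True},
    reviewer="compliance-team",
)
print(entry)
```

Calling `append_audit(path, entry)` persists each entry; keeping one self-describing line per output makes the log easy to search during an audit.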

Controlling the Output of Generative AI: Technological Solutions

Keeping an eye on how generative AI systems produce their output helps ensure that AI tools create content that is both useful and safe. Several technological solutions help manage that output. Let’s look at some of them.

Advanced Algorithms

Advanced algorithms are well suited to monitoring and managing AI output. They apply detailed rules to filter and adjust generated content, spotting harmful or inappropriate material and making the necessary changes.

Here are some features of advanced algorithms:

- Content Filtering: Removes harmful or offensive text.

- Language Processing: Understands context and meaning.

- Pattern Recognition: Identifies repeated issues or errors.
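At its simplest, pattern recognition can mean counting which problems keep recurring. This sketch assumes a hypothetical log of `(output_id, issue_label)` pairs produced by earlier checks and reports the labels that appear at least `min_count` times, most frequent first.

```python
from collections import Counter
from typing import Iterable, List, Tuple


def recurring_issues(flag_log: Iterable[Tuple[int, str]], min_count: int = 2) -> List[Tuple[str, int]]:
    """Return (issue_label, count) pairs seen at least min_count times, most frequent first."""
    counts = Counter(label for _output_id, label in flag_log)
    return [(label, n) for label, n in counts.most_common() if n >= min_count]


# Hypothetical log of issues raised by earlier checks: (output_id, issue_label).
log = [
    (101, "toxicity"), (102, "pii"), (103, "toxicity"),
    (104, "off_topic"), (105, "toxicity"), (106, "pii"),
]
print(recurring_issues(log))  # [('toxicity', 3), ('pii', 2)]
```

A label that keeps climbing this list points to a systematic weakness worth fixing at the source, rather than one-off errors.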

AI Training Protocols

AI training protocols define how AI models are trained and help ensure that generative AI systems learn correctly, which in turn improves the quality of their output.

Some important parts of AI training protocols are:

- Data Quality: Use high-quality, diverse datasets.

- Ethical Standards: Follow ethical guidelines throughout training.

- Regular Updates: Update and fine-tune models on a regular schedule.

These protocols are crucial. They make sure that AI systems create content that’s both reliable and safe.
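Part of the data-quality step can be automated. The sketch below drops very short samples and exact duplicates after light normalization (lowercasing and collapsing whitespace). The `min_words` cutoff, the normalization rules, and the function names are illustrative assumptions; real pipelines often add near-duplicate detection and content screening on top.

```python
import hashlib
import re
from typing import Iterable, List


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial variants compare equal."""
    return re.sub(r"\s+", " ", text).strip().lower()


def clean_dataset(samples: Iterable[str], min_words: int = 3) -> List[str]:
    """Drop samples shorter than min_words and exact duplicates after normalization."""
    seen = set()
    kept = []
    for sample in samples:
        norm = normalize(sample)
        if len(norm.split()) < min_words:
            continue  # too short to be a useful training example
        digest = hashlib.sha256(norm.encode("utf-8")).hexdigest()
        if digest in seen:
            continue  # duplicate of a sample we already kept
        seen.add(digest)
        kept.append(sample)  # keep the original, un-normalized text
    return kept


samples = [
    "The cat sat on the mat.",
    "the  cat sat on the MAT.",
    "ok",
    "A different example sentence.",
]
print(clean_dataset(samples))  # ['The cat sat on the mat.', 'A different example sentence.']
```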

Case Studies

Real-world examples show why it matters to monitor and control how generative AI systems produce their output, and they also teach valuable lessons.

Real-world Incidents

Several incidents have been caused by AI output that was not properly monitored. They serve as useful reminders and learning opportunities.

| Incident | Description | Impact |
|---|---|---|
| Fake News Generation | AI created and spread false news articles. | Public misinformation and panic. |
| Offensive Content | AI generated hate speech in a chat system. | User distress and system shutdown. |
| Biased Outcomes | AI showed bias in recruitment processes. | Discrimination and unfair hiring practices. |

Lessons Learned

We’ve learned a few important lessons from these incidents:

- Monitoring: Monitor AI outputs continuously.

- Human-in-the-loop: Human review can catch problems before they cause harm.

- Ethical guidelines: Set clear ethical standards and stick to them.

- Training Data: Train models on diverse sources that are as free of bias as possible.

- Transparency: Make AI decision-making processes transparent and easy to understand.

These lessons help improve AI systems’ safety and reliability. They also guide future developments in AI technology.
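The human-in-the-loop lesson can be expressed as a routing rule: publish what automated checks are confident about, block what is clearly harmful, and send everything in between to a person. In this sketch, `risk_score` stands in for the output of some hypothetical classifier, `flags` for labels raised by other checks, and the 0.5 and 0.9 thresholds are arbitrary placeholders that would need tuning.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Decision:
    action: str  # "publish", "review", or "block"
    reasons: List[str] = field(default_factory=list)


def route_output(risk_score: float, flags: List[str],
                 block_at: float = 0.9, review_at: float = 0.5) -> Decision:
    """Decide whether an output is published, blocked, or sent to a human reviewer."""
    if risk_score >= block_at:
        return Decision("block", [f"risk {risk_score:.2f} >= {block_at}"])
    if flags or risk_score >= review_at:
        reasons = list(flags)  # any flag from another check forces a human look
        if risk_score >= review_at:
            reasons.append(f"risk {risk_score:.2f} >= {review_at}")
        return Decision("review", reasons)
    return Decision("publish")


print(route_output(0.62, []))  # Decision(action='review', reasons=['risk 0.62 >= 0.5'])
```

Lowering `review_at` sends more outputs to people, which catches more problems but costs more reviewer time; the right balance depends on how harmful a missed error would be.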

Future Prospects

Managing the output of generative AI systems is essential for a secure future. AI is still developing and its influence on daily life keeps growing, so safe and responsible use is essential for society to advance.

Innovations in AI Safety

Innovations in AI safety are crucial for upcoming technology. Developers use sophisticated methods to keep an eye on AI behavior. These methods aim to ensure that AI serves people and help avoid negative consequences.

Some key innovations include:

- AI alignment research: Making sure the goals of AI systems align with human values.

- Robustness testing: Checking that AI systems behave reliably when given unexpected or adversarial inputs.

- Transparency tools: Making AI decision-making more understandable for users.

These innovations help build trust in AI systems and reduce the risks that come with AI advancements.
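Robustness testing often means feeding a system deliberately altered inputs and checking whether its behavior holds up. The sketch below compares a hypothetical exact-match detector with one that first undoes a few simple obfuscations, measuring the share of perturbed variants each one catches. The `badword` placeholder, the perturbations, and the character substitutions are assumptions chosen for illustration.

```python
import re
from typing import Callable, Iterator

SUBSTITUTIONS = str.maketrans({"0": "o", "4": "a", "3": "e", "1": "i"})


def naive_detector(text: str) -> bool:
    """Hypothetical detector: flags text containing the exact string 'badword'."""
    return "badword" in text


def normalized_detector(text: str) -> bool:
    """Same check after undoing simple obfuscations (case, digit swaps, separators)."""
    text = text.lower().translate(SUBSTITUTIONS)
    text = re.sub(r"[^a-z]", "", text)  # drop spaces, punctuation, and leftover symbols
    return "badword" in text


def perturbations(text: str) -> Iterator[str]:
    """Generate simple variants an adversarial user might try."""
    yield text
    yield text.upper()
    yield " ".join(text)                              # "b a d w o r d"
    yield text.replace("a", "4").replace("o", "0")    # "b4dw0rd"


def robustness_rate(detector: Callable[[str], bool], text: str) -> float:
    """Fraction of perturbed variants the detector still catches."""
    variants = list(perturbations(text))
    return sum(detector(v) for v in variants) / len(variants)


print(robustness_rate(naive_detector, "badword"), robustness_rate(normalized_detector, "badword"))  # 0.25 1.0
```

The naive detector catches 1 of 4 variants and the normalized one catches all 4. Normalization has a cost, though: stripping spaces means a phrase like "a bad word" also matches, so robustness gains should be weighed against new false positives.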

Long-term Impacts

Understanding the long-term effects of AI on people is vitally important. Well-controlled AI could help build a safer, more prosperous future and improve industries such as healthcare, finance, and education.

Consider these potential long-term impacts:

| Industry | Potential Impact |
|---|---|
| Healthcare | Personalized treatments and early disease detection. |
| Finance | Better fraud detection and risk management. |
| Education | Customized learning experiences and improved accessibility. |

By ensuring AI operates safely, we can harness its potential. This leads to a more secure and beneficial technological future.

Conclusion

Controlling the output of generative AI systems is critical for several reasons. It supports ethical use, protects people from harm, and improves the quality and accuracy of generated content. It also helps keep misinformation from spreading and protects sensitive data and privacy.

By regulating AI output, we can responsibly reap its benefits. This results in a more secure, trustworthy digital world for everyone. Let us prioritize sensible AI regulation for a better future.

Frequently Asked Questions

What Is Generative AI?

Generative AI uses algorithms to create new content, such as text, images, or music.

Why Is Controlling the Output of Generative AI Crucial?

Uncontrolled AI output can lead to misinformation, bias, and harmful content.

How Does AI Bias Occur?

AI bias happens when the training data is unbalanced or reflects human prejudices.

Can Generative AI Be Harmful?

Yes, it can spread false information, create fake identities, or produce inappropriate content.

What Is The Role Of Ethics In AI?

Ethical principles help ensure that AI systems are fair and transparent and that they respect human rights.

How Can AI Output Be Controlled?

Using filters, human oversight, and ethical guidelines helps manage AI output.

Why Is Transparency Important In AI?

Transparency enables users to understand AI decisions and builds trust.

What Are The Challenges In Controlling AI?

Challenges include bias, data privacy, and maintaining creative freedom.

Can AI Create Fake News?

Yes, AI can generate realistic fake news that can be hard to distinguish from real news.

What Is Responsible AI Use?

Responsible AI use means ensuring AI benefits society without causing harm.
